Well that's simply it.. there's no way to cool it properly. The limits of thermal transfer are the main issue with these reduced die sizes, and until there's a new method of transfer, or a new die design that includes the cooler with liquid flowing through the substrates.. there's not much they can do. If you don't want the chip to burn up in the case of GPU failure, then you can easily set the throttle temperature to 70, 80, or whatever you'd like so that it will downvolt/downclock to avoid exceeding your set TJMAX.
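To put a rough shape on that trade-off, here is a minimal sketch of how a lower throttle temperature caps the clock a chip can hold. Everything in it is an assumption for illustration: the linear "degrees per GHz" model, the 25 C ambient, and the 0.8 to 5.0 GHz clock range are made-up numbers, not measurements of any real CPU, and real silicon scales worse than linearly because voltage rises with frequency.

```python
def sustained_clock_ghz(limit_c, ambient_c=25.0, degrees_per_ghz=18.0,
                        max_clock_ghz=5.0, min_clock_ghz=0.8):
    """Estimate the clock a chip could hold without exceeding limit_c.

    Toy model (hypothetical): steady-state temperature is assumed to be
    ambient_c + degrees_per_ghz * clock. All default values are invented
    for illustration only.
    """
    headroom_c = limit_c - ambient_c
    clock = headroom_c / degrees_per_ghz
    # Clamp to the range the (hypothetical) chip can actually run at.
    return max(min_clock_ghz, min(max_clock_ghz, clock))


if __name__ == "__main__":
    for limit in (70, 80, 90, 100):
        print(f"throttle at {limit} C -> about {sustained_clock_ghz(limit):.2f} GHz sustained")
```

Under these made-up numbers, each 10 C you take off the limit costs a bit over half a GHz of sustained clock, which is the "paying for performance you can't use" effect in miniature.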


Intel 13th Gen CPU thread

- Thread starter acroig


the_sextein

Well-known member

Yeah I get you.

It's not really a GPU failure I'm worried about. The software will sometimes kick the GPU out if you are rendering an image series. If it doesn't properly clear the VRAM, then when the scene updates to the next frame it can overflow the VRAM and kick the GPU from the program. Then it will switch to the CPU and run all cores at max load for hours until I can get to it. The CPU will throttle all day and that will shorten its lifespan.

I could lower the throttle temp but then I'm paying for performance that I can't actually get so it seems like a waste of cash at the high end.

I think AMD has been doing better with each new release in this regard, as well as having less power draw in general. I think they can both do better and have been too focused on pushing an extra 100 MHz on a design that is pushed way past its power efficiency sweet spot. Faster designs that don't need to be overclocked beyond the intended power level to compete would be nice for a change. Maybe the cost of modern chips is just getting too out of control?

I feel like AMD has been more professional in this regard. They are more likely to make a new design and stick to it. Intel has been very reluctant to let go of its P cores or its manufacturing, and they have consistently overclocked their chips beyond the point of no return for the last 3 years.

I like Intel but I want them to do better and hope that they improve their product lines in the near future.


I don't know what you mean by "letting go of its P cores". They're the ones ahead of the curve with the Big-Little design that is the way of the future. If anything, AMD hasn't let go of the "P core" old way of doing things.

Why would Intel let go of its manufacturing? Makes no sense.. they may have fallen behind over the years but with the new factories and government incentives, every single American should be rooting for Intel to succeed in this aspect. I don't know about you, but we have seen the issues that come with TSMC having sole capability of manufacturing high-end nodes..

Things take time to correct the errors of past ownership. Rome wasn't built in a day. They're on the right track.


the_sextein

Well-known member

I think you misunderstood me. I'm just hoping Intel improves its current problematic situation as soon as possible. I understand it takes time and I'm certainly rooting for them to return to the top when it comes to manufacturing.

I'm just saying that right now they are relying on TSMC and don't have that advantage. In fact, AMD is spending the money for a smaller, more advanced 5nm node and is less reluctant to make a new design, which has swayed things in their favor.

Intel's E cores were a good idea and I think it's a winning design, but Intel's larger P cores at the same frequency as AMD's large cores will consume more power and produce more heat because they are inefficient and need to be modernized. They need to do more to keep them out of the red, so to speak.

I do agree they are on the correct path but I'm not confident in the 13900K like I was in the 9900K. I'm not counting them out but I have severe doubts that the 13900K is going to be a chip that I want to own. It just doesn't look like it will be as efficient and its performance looks to be similar to AMD's. If AMD had a large performance disadvantage then the extra power and heat from Intel might be worth considering, but if AMD can offer similar performance at better temps then I'm jumping ship until Intel either jumps down to the same silicon that AMD is on or gets its own manufacturing in top form again, which will be a few years yet.


Intel has made chips that, despite being on worse/larger nodes, have performed equally or better.

Just like NV, Intel hasn't had to rely on a node advantage to perform up to par. If AMD didn't pay TSMC for the node advantage, I'd imagine they would be behind. Regardless.. the larger node helps Intel with thermal dissipation. I'm super curious how this 5nm node is going to work. AMD already struggled with 7nm.

Heat being produced isn't really the issue anymore at these node sizes, if you ask me. It's being able to dissipate it, and the smaller we go, the harder that is.

Alder Lake cores are "modernized". Raptor Lake should be a stepping stone, but the bigger leap will be Meteor Lake.


the_sextein

Well-known member

I agree with that as well. Better designs always pay off regardless of what node they are on, but Intel's IPC, cache, and frequency are all being matched, and the power and heat have been worse for these last few CPU series. The E cores could give Intel a multithread advantage but based on the 12900K I would say that advantage will not be a large one.

In terms of heat vs silicon size, it depends. Sometimes a smaller node can be beneficial because you can power more transistors for less energy when they are packed closer together. On the other hand, you can get crosstalk or energy bleed, and the heat that is produced is concentrated on a smaller surface and harder to dissipate. Still, as you will see with Nvidia's new 5nm node, they are going to make large gains without the temps changing much. More power comes from more CUDA cores and higher frequencies, but the node drop to 5nm is actually saving them power and heat and they are still capable of cooling it properly. People complain about the power rise but it's still a very efficient design if you have doubled the throughput with a 50 watt increase in power. If they improve the cooling design to keep the temps stable or even lower them like Nvidia did last time, then everything is good to go.

With Intel I have seen a slick E core intro that has allowed them to keep pace with or even surpass AMD in multicore workloads, but frequency and IPC have not gone very far, and considering the power and temps are already worse... They just seem locked up right now.
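To make the "doubled throughput for 50 more watts" point concrete, here is a quick back-of-the-envelope sketch. The 2x throughput and +50 W figures are the ones from the post; the 350 W starting power is purely a placeholder I picked for illustration, so swap in whatever baseline you think is right.

```python
def efficiency_gain(old_power_w, power_increase_w, throughput_ratio):
    """Return how much performance per watt improves.

    throughput_ratio is new throughput divided by old throughput
    (2.0 means "doubled"). The result is new perf/W divided by old perf/W.
    """
    new_power_w = old_power_w + power_increase_w
    power_ratio = new_power_w / old_power_w
    return throughput_ratio / power_ratio


if __name__ == "__main__":
    # 350 W baseline is an assumed placeholder, not a figure from the thread.
    gain = efficiency_gain(old_power_w=350, power_increase_w=50, throughput_ratio=2.0)
    print(f"perf per watt improves by about {gain:.2f}x")
```

With that assumed baseline the card draws about 14% more power for twice the work, so perf per watt lands at roughly 1.75x the old design. A lower baseline shrinks the gain, but it stays well above 1x for any realistic starting point.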


metroidfox

Well-known member

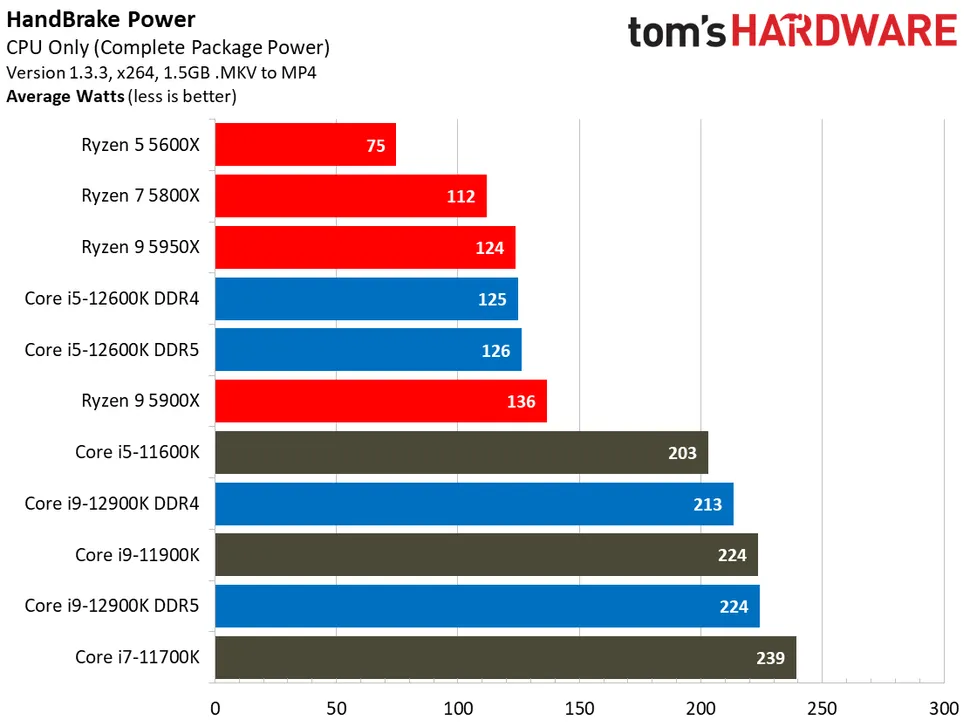

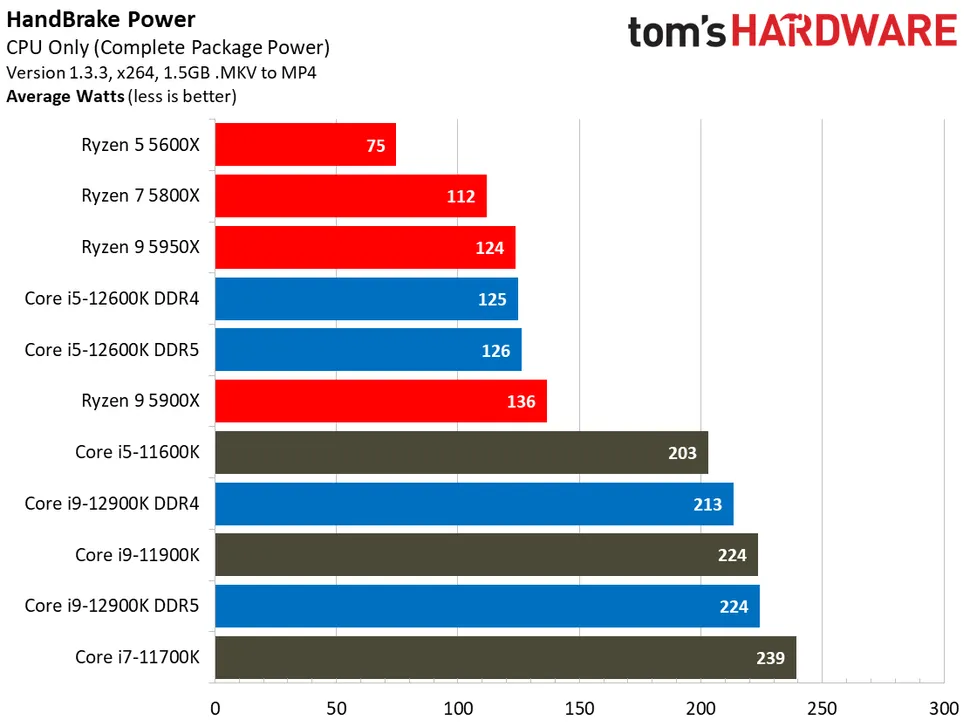

AMD's Ryzen 5xxx series are significantly more power efficient than either the 11th, 12th or 13th gen parts. Intel simply had poor processor design. Just like AMD did pre-Ryzen. (See below for example.)

Processors are also basically designed to take up as much cooling as you can throw at them. The 12th gen parts in particular scale all the way to 360mm water cooling solutions.

This isn't the mid-2000s any more. These parts are designed to stay at 90C.

The alternative is to do what Apple does with the M1 and M2, and throw a lot of processor area at the problem instead of scaling clock speeds. Personally I prefer this solution, but Intel and AMD clearly do not.

Hopefully competition changes things.


the_sextein

Well-known member

AMD's core design is more modern in that it can do more with less frequency (IPC) for a variety of reasons. The more complex the core, the more you reap from node drops. Intel probably knows that improvements from the silicon are about to hit a brick wall so they didn't bother to update it. They have always had the headroom to push more frequency, but that has clearly hit a wall now that core counts have passed 8, which forced them to make E cores, and the big cores remain behind AMD. It will be interesting to see how AMD's new cores will perform vs Intel now that they have reached parity with Intel in frequency.

Regardless of performance I think Intel needs to do something about its power problems. AMD isn't out of the woods yet either; we have yet to see how the 7000 series will fare when it comes to heat now that they are pushing 5.5 GHz+. It looks to me like the socket max is lower than Intel's power limits, which tells me that AMD still has a power advantage and probably a decent-sized one.


acroig

Just another Troll

This isn't the mid-2000s any more. These parts are designed to stay at 90C.

Is that really the case? I see throttling on a laptop AMD 5800 CPU at 75C.

the_sextein

Well-known member

Modern electronics are designed for and capable of running at higher temps than they were in the 1990s. 90C should NOT be ok with anyone for extended periods of time. I don't care what they say, it is not sustainable.

I remember when it was ok if the CPU went into the upper 60s under full load. Then it was ok if it hit the upper 70s as long as it was not sustained. Then it hit the 80s and people just started b!tching, and then it hit the 90s and it would seem that the industry has gone off the rails. Intel hit 100C on the last one, and since I use my CPU for more than just gaming or running a benchmark a couple of times when I build the system, I've drawn a line that I won't cross and that is 90C. If these chips peak into the 90s then I'm not buying them. They run all day long and that is a fire hazard.


the_sextein

Well-known member

https://wccftech.com/intel-core-i9-...-than-12900k-5950x-5800x3d-in-aots-benchmark/

Can't complain about those gains. Intel is pushing forward hard and giving much more than they have in a long time.


acroig

Just another Troll

Intel Core i7-13700K 16 Core Raptor Lake CPU Specs

The Intel Core i7-13700K CPU will be the fastest 13th Gen Core i7 chip on offer within the Raptor Lake CPU lineup. The chip features a total of 16 cores and 24 threads. This configuration is made possible with 8 P-Cores based on the Raptor Cove architecture and 8 E-Cores based on the Gracemont core architecture. The CPU comes with 30 MB of L3 cache and 24 MB of L2 cache for a total combined 54 MB cache. The chip was running at a base clock of 3.4 GHz and a boost clock of 5.40 GHz. The all-core boost is rated at 5.3 GHz for the P-Cores while the E-Cores feature a base clock of 3.4 GHz and a boost clock of 4.3 GHz.
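For anyone wondering how 16 cores turn into 24 threads, the arithmetic can be sketched like this. The core counts and cache sizes are the ones quoted above; the two-threads-per-P-core and one-thread-per-E-core split reflects how Intel's hybrid chips handle Hyper-Threading, and the function name is just something made up for the example.

```python
P_CORES = 8             # Raptor Cove performance cores (from the quoted specs)
E_CORES = 8             # Gracemont efficiency cores (from the quoted specs)
THREADS_PER_P_CORE = 2  # P-cores support Hyper-Threading
THREADS_PER_E_CORE = 1  # E-cores run one thread each
L3_CACHE_MB = 30
L2_CACHE_MB = 24


def summarize_13700k():
    """Return (total cores, total threads, combined L2+L3 cache in MB)."""
    cores = P_CORES + E_CORES
    threads = P_CORES * THREADS_PER_P_CORE + E_CORES * THREADS_PER_E_CORE
    cache_mb = L3_CACHE_MB + L2_CACHE_MB
    return cores, threads, cache_mb


if __name__ == "__main__":
    cores, threads, cache_mb = summarize_13700k()
    print(f"{cores} cores, {threads} threads, {cache_mb} MB combined cache")
```

That lines up with the 16 cores, 24 threads and 54 MB combined cache in the quoted specs.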

This is the chip that AMD has to best for me.


Boost Frequencies of the All-important Core i5-13400 and i5-13500 Revealed


The new Intel processors have maximum turbo frequencies of 4.10 GHz and 4.5 GHz, respectively.


Maximum boost frequencies of the Core i5-13400 and i5-13500 surfaced on the web thanks to Passmark screenshots scored by TUM_APISAK. Boost frequencies of 13th Gen Core processors weren't part of the recent lineup leak. The i5-13400 has a maximum boost frequency of 4.10 GHz, while the i5-13500 comes with 4.50 GHz. Both SKUs have an identical base frequency of 2.50 GHz. The maximum turbo frequency of 4.10 GHz for the i5-13400 is significantly lower than the 5.80 GHz of the flagship i9-13900K, and the 5.10 GHz of the i5-13600K. It's also quite spaced apart from the i5-13500, with its 4.50 GHz. Perhaps Intel really wants some consumer interest in the Core i5 SKUs positioned between the i5-13400 and the i5-13600K.

Source: techPowerUp!

Intel Core i9-13900 (non-K) Spotted with 5.60 GHz Max Boost, Benchmarked


In contrast to the Core i9-13900K, the non-K version has a locked multiplier and a lower maximum turbo frequency.


Tested in Geekbench 5.4.5, the i9-13900 scores 2130 points in the single-threaded test, and 20131 points in the multi-threaded one. Wccftech tabulated these scores in comparison to the current-gen flagship i9-12900K. The i9-13900 ends up 10 percent faster than the i9-12900K in the single-threaded test, and 17 percent faster in the multi-threaded. The single-threaded uplift is thanks to the higher IPC of the "Raptor Cove" P-core, and slightly higher boost clock; while the multi-threaded score is helped not just by the higher IPC, but also the addition of 8 more E-cores.

Source: techPowerUp!
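The article gives the i9-13900 scores and the percentage uplifts but not the i9-12900K numbers they were compared against. As a rough sketch, you can back those out from the figures quoted above. Keep in mind the 10 and 17 percent values are rounded, so the results are only approximate, and `implied_baseline` is just a helper name made up for this example.

```python
def implied_baseline(new_score, uplift_percent):
    """Back out the older chip's score from the new score and the quoted uplift."""
    return new_score / (1 + uplift_percent / 100)


if __name__ == "__main__":
    # Scores and uplifts as quoted from the Geekbench 5.4.5 leak above.
    single = implied_baseline(2130, 10)
    multi = implied_baseline(20131, 17)
    print(f"implied i9-12900K single-thread score: about {single:.0f}")
    print(f"implied i9-12900K multi-thread score: about {multi:.0f}")
```

That works out to roughly 1936 single-threaded and 17206 multi-threaded for the 12900K entry used in that comparison, give or take the rounding in the percentages.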